In a recent provocative essay, Blaise Agüera y Arcas and Peter Norvig (from Google and Stanford respectively) assert “Today’s most advanced AI models have many flaws, but decades from now, they will be recognized as the first true examples of artificial general intelligence.” Specifically, they are referring to large language models like ChatGPT, Bard, LLaMA and Claude.

What should a skeptic think about the claim that ChatGPT is an AGI?

If current large language models are not AGIs, then what are they? The alternative is “narrow” AI. An example of narrow AI is a neural network trained to do one thing, such as determining whether or not a photograph contains a cat. Presumably AI lies on a spectrum, with dedicated object classifiers at one end and superhuman AGI at the other. Therefore, the question is not whether ChatGPT is an AGI, but where it lies on that spectrum. And, if we must draw a line between narrow and general AI, where do we (arbitrarily) draw it?

So I asked ChatGPT, “Are you an AGI?” It responded, “No, I am not an AGI (Artificial General Intelligence). I am a language model based on the GPT-3.5 architecture developed by OpenAI. I am a specific, narrow AI model designed for natural language processing and generation. While I can understand and generate text based on the input I receive, I don’t possess the broad spectrum of intelligence and capabilities associated with AGI, such as human-like understanding, consciousness, or general problem-solving abilities. I’m a tool that processes and generates text based on patterns in the data I was trained on.”

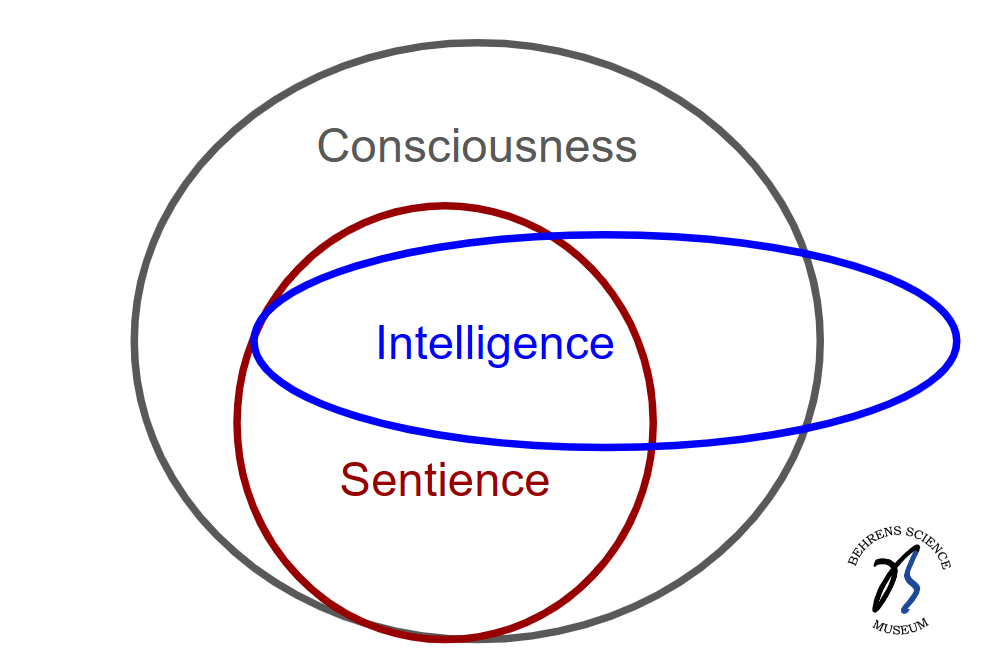

Note that ChatGPT brought up consciousness in asserting that it is not an AGI. Consciousness and sentience are closely related to intelligence. Sentience is the ability to feel things like pain and emotions, and it is generally considered one aspect of the broader concept of consciousness. In principle, it is possible to be conscious without being sentient (think of Data in Star Trek: The Next Generation), but sentience implies conscious experience (see the Venn diagram above). Consciousness also lies on a spectrum, with “higher order” consciousness extending beyond wakefulness to include self-awareness and introspection.

Is it possible to be intelligent without being conscious? We have no examples of intelligence without consciousness, but that doesn’t mean that it’s impossible. ChatGPT, when asked if it is conscious, responded, “No, I am not conscious. I am a machine learning model created by OpenAI, and I lack subjective awareness, emotions, or consciousness. I generate responses based on patterns in the data I was trained on and the input I receive, but I don’t have personal experiences or self-awareness. I provide information and answer questions to the best of my knowledge and abilities, but my responses are based on algorithms and data, not on conscious thought or awareness.”

Unfortunately, we have no way to test ChatGPT’s claim. It says that it is not conscious (or an AGI) because that is how it was trained; had it said the opposite, that too would be a product of its training. We need some objective method to test the claim. Even with other human beings, we assume that they have the same kind of conscious experience we do, but we can’t be certain. The best we can do is to show that we all have similar biological responses to stimuli (brain activity, for example).

Until recently, the ability to communicate through complex language was considered uniquely human. That is no longer the case. According to ChatGPT, “I analyze the context of the conversation and try to predict the next word or phrase that is most likely to follow, based on the input provided.” So ChatGPT is merely a probabilistic word or phrase generator. It’s just an algorithm, right? The problem is that I can say the same thing about myself. Given my lifetime of language “training” and the context of this essay, I think this sentence is the most likely next sentence. My own ability to generate a coherent sentence is just as much a black box to me as ChatGPT’s ability is.
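To make the idea of a “probabilistic word generator” concrete, here is a deliberately tiny sketch. It assumes a simple bigram model (counting which word follows which) rather than anything like the neural network behind ChatGPT. The toy corpus and the function names `train_bigrams`, `next_word_probabilities` and `most_likely_next` are hypothetical illustrations, not part of any real system.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn raw follow-counts into a probability for each candidate next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def most_likely_next(follows, word):
    """Return the single most probable next word, or None if the word was never seen."""
    probs = next_word_probabilities(follows, word)
    return max(probs, key=probs.get) if probs else None

# Hypothetical toy "training data"; real language models learn from vastly more text.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)

print(next_word_probabilities(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(most_likely_next(model, "the"))         # cat
```

This toy looks back only one word. A model like ChatGPT conditions on the whole preceding context. Replacing `max` with a weighted random choice over these probabilities would produce varied text instead of always the same continuation.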

As skeptics, we assume that consciousness emerges from the structure of the brain. There is no supernatural “ghost in the machine” that makes us conscious. But if consciousness is a property that emerges from the machinery of the brain, couldn’t it also emerge from the machinery of a computer? I think it could, but as long as no test of machine consciousness exists, the claim will remain unfalsifiable and therefore beyond the scope of science.

Bruce Behrens